A Guide to Effective Proxy Scraping: Essential Information

In today's digital landscape, the ability to scrape data effectively can give businesses, marketers, and developers a competitive advantage. However, collecting data from the web is challenging, especially when it comes to managing proxy servers. Understanding how proxy scraping works is essential for anyone who wants to take full advantage of automated scraping.

Whether you want to build a reliable proxy list for your web scraping projects or need tools such as proxy checkers and verifiers, knowing how to use proxies effectively can transform your results. From distinguishing between HTTP, SOCKS4, and SOCKS5 proxies to evaluating the best sources of high-quality proxies, this guide covers the essentials of successful proxy scraping. You will learn how to scrape proxies for free, check their speed, and preserve your anonymity while automating tasks online.

Introduction to Proxy Harvesting

Proxy harvesting has become an integral part of modern web scraping and data collection. As more businesses and individuals rely on data to make decisions, the demand for efficient and reliable proxies has grown. With proxy scrapers and verification tools, users can access large amounts of information while preserving their privacy and improving scraping efficiency.

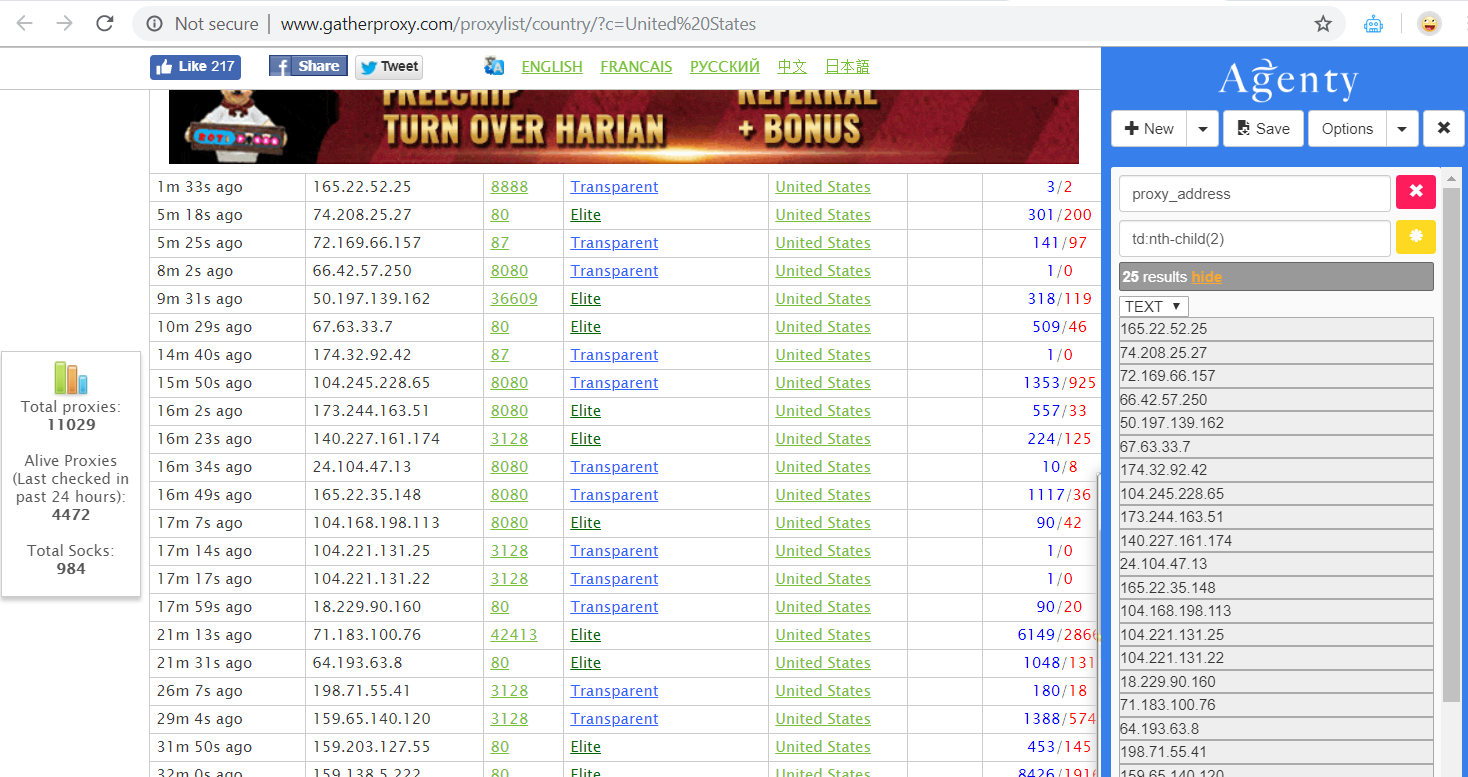

Proxy scraping involves collecting lists of proxies from various sources so that users can route their traffic through different IP addresses. This helps avoid detection, manage IP bans, and increase the overall speed of data collection. Whether you use free proxy scrapers or paid solutions, the goal is the same: to collect data efficiently and effectively.
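In practice, the collection step usually comes down to pulling `IP:port` pairs out of whatever text a source returns. The minimal sketch below assumes the source page has already been downloaded as plain text and handles only IPv4 addresses; `extract_proxies` is a hypothetical helper name, not part of any library.

```python
import ipaddress
import re

# Matches "IP:port" pairs such as 203.0.113.7:8080 anywhere in a block of text.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text: str) -> list[str]:
    """Return unique, well-formed IPv4 "host:port" strings in order of first appearance."""
    seen: set[str] = set()
    proxies: list[str] = []
    for host, port in PROXY_PATTERN.findall(text):
        try:
            ipaddress.IPv4Address(host)  # rejects impossible octets such as 999.1.1.1
        except ipaddress.AddressValueError:
            continue
        if not 0 < int(port) <= 65535:  # rejects out-of-range ports
            continue
        candidate = f"{host}:{port}"
        if candidate not in seen:  # the same proxy often appears on several lists
            seen.add(candidate)
            proxies.append(candidate)
    return proxies
```

Because the pattern is applied to raw text, the same function works on HTML, CSV, or plain-text lists, at the cost of occasionally matching numbers that merely look like proxies.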

However, not all proxies are created equal. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies is crucial for choosing the right one for your purposes. Ensuring that your proxies are high-quality and suited to your specific tasks can also significantly affect the success of your automation and web scraping. With the right knowledge and tools, proxy scraping opens up new possibilities for data collection.

Types of Proxies

Proxy servers come in several types, each serving different purposes in web scraping and general internet use. The two primary types are HTTP and SOCKS proxies. HTTP proxies are mainly used for web browsing and work well with sites that communicate over HTTP. They can handle a variety of tasks, including web scraping, but are limited when it comes to non-HTTP traffic. SOCKS proxies, by contrast, are more flexible: they operate at a lower level and relay arbitrary connections, which makes them suitable for applications such as torrenting and online gaming. SOCKS5 extends SOCKS4 with authentication, UDP relaying, and IPv6 support.
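To make the HTTP/SOCKS distinction concrete, the sketch below builds the `proxies` mapping accepted by the popular `requests` library. It assumes `requests` is the HTTP client in use; SOCKS schemes additionally require the optional `requests[socks]` extra. The helper name `proxy_config` is hypothetical.

```python
# Proxy URL schemes understood by requests (SOCKS ones need: pip install "requests[socks]").
SUPPORTED_SCHEMES = {"http", "socks4", "socks4a", "socks5", "socks5h"}


def proxy_config(host: str, port: int, scheme: str = "http") -> dict[str, str]:
    """Return a requests-style mapping that routes both HTTP and HTTPS traffic via one proxy."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    url = f"{scheme}://{host}:{port}"
    return {"http": url, "https": url}
```

The result is passed as `requests.get(url, proxies=proxy_config(...), timeout=10)`. With `socks5h`, hostnames are resolved by the proxy rather than locally, which avoids leaking DNS lookups from your own machine.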

When considering proxy types, it is also important to understand the difference between public and private proxies. Public proxies are open to anyone, often for free. However, they tend to be slower, less reliable, and riskier in terms of security. Private (dedicated) proxies are assigned to a single user, offering higher speed, anonymity, and reliability. This makes them the preferred choice for tasks that require consistent results, such as automated web scraping.
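Private proxies are commonly protected by a username and password, although some providers use IP allow-listing instead. Assuming credential-based access, the sketch below shows one way to build the proxy URL; the helper name and the example credentials are hypothetical.

```python
from urllib.parse import quote


def authenticated_proxy_url(user: str, password: str, host: str, port: int,
                            scheme: str = "http") -> str:
    """Embed percent-encoded credentials so characters like '@' or ':' don't break the URL."""
    return f"{scheme}://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"
```

Percent-encoding matters because an unescaped `@` or `:` in a password would be parsed as part of the host portion of the URL.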

Another important distinction is between transparent, anonymous, and elite proxies. Transparent proxies do not hide the user's IP address and are easily detected. Anonymous proxies hide the user's IP address but may reveal that a proxy is being used. Elite proxies, also known as high-anonymity proxies, offer the highest level of anonymity: they hide the user's IP address and do not reveal that a proxy is in use, which makes them ideal for scraping without detection. Understanding these types helps you select the most suitable proxy for your needs.

Choosing the Best Proxy Scraper

When selecting a proxy scraper, consider the specific needs of your web scraping project. Different scrapers are designed for different tasks, such as gathering data quickly or providing security. Look for features such as speed, the ability to handle different proxy types, and compatibility with automation tools. A fast proxy scraper can make a substantial difference in meeting your data extraction goals without unnecessary delays.

Another critical factor is the source of the proxies, since higher-quality proxies generally produce better scraping results. Review the proxy list the scraper provides and verify that it offers reliable free and paid options. Some tools specialize in HTTP or SOCKS proxies, so you may want one that matches your intended scraping method. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies can also inform your choice.

Finally, consider additional features such as proxy testing and anonymity checks. A good proxy checker will not merely test whether proxies are operational but will also report their speed and anonymity level. By choosing a proxy scraper that meets these standards, you can improve the efficiency and success rate of your web scraping projects.

Verifying Proxy Performance

When using proxies for web scraping, verifying their performance is essential to the success of your projects. A trustworthy proxy must offer not just low latency but also a high level of anonymity. To verify proxy performance, start by measuring the speed of each proxy. Proxy testing tools can measure connection and response times, showing which proxies are fastest for your requirements.
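A simple first-pass speed check is to time how long it takes to open a TCP connection to each proxy. The sketch below uses only the standard library; note that it measures the handshake with the proxy itself, not a full request through it, so a slower but more realistic test would time an actual request to a target site via the proxy. The function name `tcp_latency` is hypothetical.

```python
import socket
import time
from typing import Optional


def tcp_latency(host: str, port: int, timeout: float = 5.0) -> Optional[float]:
    """Return seconds taken to open a TCP connection to the proxy, or None on failure."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return time.perf_counter() - start
    except OSError:  # refused, unreachable, or timed out (socket.timeout is an OSError)
        return None
```

Returning `None` for failures rather than raising keeps the caller's loop simple: dead proxies and slow proxies can be filtered out in the same pass.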

Another key aspect of proxy performance is anonymity. It is important to identify whether the proxies you are using are transparent, anonymous, or elite. Tools designed to test proxy anonymity check whether your real IP address is exposed to the target server or whether the proxy reveals its own presence. This information helps you pick proxies that meet your privacy needs, especially when scraping sensitive data.
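A common heuristic behind anonymity checkers is to send a request through the proxy to a server you control that echoes back the headers it received, then inspect them. The sketch below assumes you already have those echoed headers as a dictionary and know your real public IP; the header list and the function name are illustrative assumptions, not a formal standard.

```python
import re

# Headers that commonly carry the client's IP or signal that a proxy is involved.
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip",
                 "proxy-connection", "client-ip")


def classify_anonymity(received_headers: dict[str, str], real_ip: str) -> str:
    """Classify a proxy from the headers a test server saw when reached through it.

    transparent: the real IP appears in some header value
    anonymous:   no real IP, but proxy-related headers are present
    elite:       neither
    """
    headers = {name.lower(): value for name, value in received_headers.items()}
    for value in headers.values():
        # Split on separators used by X-Forwarded-For and Forwarded so that
        # 198.51.100.90 is not mistaken for 198.51.100.9.
        if real_ip in re.split(r"[\s,;=\"\[\]]+", value):
            return "transparent"
    if any(name in headers for name in PROXY_HEADERS):
        return "anonymous"
    return "elite"
```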

Additionally, monitoring the stability and reliability of your proxies is critical. Regular checks help you detect proxies that become unresponsive or slow down unexpectedly. Combining proxy testing tools with subscription-based services tends to give the best results, as paid providers often maintain more reliable proxy pools and supply a steady stream of high-quality proxies suited to web scraping and data collection.
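One simple way to act on regular checks is to count consecutive failures per proxy and evict a proxy once it fails too many times in a row. The class name and the default threshold of three failures below are arbitrary, illustrative choices.

```python
class ProxyHealth:
    """Track consecutive failures per proxy and drop proxies that keep failing."""

    def __init__(self, proxies: list[str], max_failures: int = 3):
        self.max_failures = max_failures  # illustrative threshold, tune per workload
        self.failures = {proxy: 0 for proxy in proxies}

    def record(self, proxy: str, ok: bool) -> None:
        """Record the outcome of one check or request made through `proxy`."""
        if proxy not in self.failures:
            return  # already evicted or never tracked
        if ok:
            self.failures[proxy] = 0  # any success resets the failure streak
        else:
            self.failures[proxy] += 1
            if self.failures[proxy] >= self.max_failures:
                del self.failures[proxy]

    def healthy(self) -> list[str]:
        """Return proxies that have not been evicted, in insertion order."""
        return list(self.failures)
```

Counting consecutive failures, rather than total failures, keeps a proxy that had one bad minute from being discarded permanently.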

Best Tools for Proxy Scraping

When it comes to proxy scraping, the right tools can make a noticeable difference in efficiency and results. One of the well-known options in the field is ProxyStorm. Designed with usability in mind, it provides a comprehensive proxy scraping solution that lets users gather large proxy lists quickly. Its advanced filtering features help identify high-quality proxies suitable for a range of tasks, whether web scraping or automation.

Another strong option is an HTTP proxy scraper, which specializes in collecting proxies suited to web traffic. Such a tool extracts proxies from many sources, providing a diverse range of options. With a built-in proxy verification tool, it not only gathers proxies but also checks their uptime and reliability, which is essential for tasks that demand consistent performance.

For those who prefer a hands-on approach, scraping proxies with Python can yield great results. Numerous libraries and scripts can help retrieve free proxies from different sources. With them, users can build customized solutions for their specific needs while gathering data on proxy quality and speed. This flexibility makes Python a popular choice among developers and data extraction professionals who want tailored proxy solutions.
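Because checking a proxy is mostly waiting on the network, a thread pool is a simple way to test many proxies at once in Python. In the sketch below, `check` is any function you supply that takes a `"host:port"` string and returns a latency in seconds or `None` (for example, one that times a request through the proxy); both `check` and `check_all` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional


def check_all(proxies: list[str],
              check: Callable[[str], Optional[float]],
              workers: int = 20) -> dict[str, Optional[float]]:
    """Run `check` on every proxy in parallel threads; map each proxy to its result.

    `check` should catch its own network errors and return None, because an
    exception raised inside it would be re-raised here and abort the whole run.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, so zip pairs them correctly.
        return dict(zip(proxies, pool.map(check, proxies)))
```

Threads are a reasonable fit here because the work is I/O-bound; for thousands of proxies, an asynchronous client would be the usual next step.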

Free versus Paid Proxy Services

When choosing proxy services for web scraping or automation, a key decision is whether to use free or paid proxies. Free proxies are attractive because they cost nothing, which makes them accessible to anyone who wants to start scraping without a financial commitment. However, they typically have notable drawbacks, such as slower speeds, more downtime, and a greater likelihood of being blacklisted. These limitations can hinder your scraping efforts, since the reliability and performance of free proxies are generally inconsistent.

Paid proxies, on the other hand, provide a higher level of service. They typically offer faster connections, better anonymity, and more reliable performance. Premium proxy providers invest in their infrastructure, so users get access to a dedicated pool of IP addresses. This reduces the likelihood of being blocked and makes for a smoother scraping experience. Additionally, many premium proxy services include customer support, which is a valuable resource when problems arise during data extraction.

In summary, while free proxies may work for casual users or small projects, anyone serious about web scraping should consider investing in paid proxies. The speed, reliability, and security that come with paid services can save time in the long run and improve the quality of your data collection. For those who want their web scraping to be effective and productive, paid proxies are the clear choice.

Conclusion and Best Practices

In proxy harvesting, understanding the different proxy types and sources is crucial for success. Combining reliable proxy scrapers and checkers can significantly improve your web scraping. Always prioritize high-quality proxies that offer both speed and anonymity. Tools like ProxyStorm can also streamline the workflow, helping ensure you have access to current, working proxy lists.

To maintain good performance, regularly check the speed and reliability of the proxies you use. A solid proxy verification tool will help you discard slow or failed proxies quickly. This not only saves time but also improves the efficiency of your web scraping tasks. Use resources that publish regular updates on the best free proxy sources so you stay ahead in finding suitable proxies.
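Once check results are in, discarding slow or failed proxies is a small filtering step. The sketch below assumes results are stored as a mapping from proxy to latency in seconds, with `None` meaning the check failed; the function name and the 2-second cutoff are illustrative assumptions.

```python
from typing import Optional


def usable_proxies(results: dict[str, Optional[float]],
                   max_latency: float = 2.0) -> list[str]:
    """Drop failed proxies (None) and those slower than max_latency; return fastest first."""
    fast = [(latency, proxy) for proxy, latency in results.items()
            if latency is not None and latency <= max_latency]
    return [proxy for _, proxy in sorted(fast)]
```

Sorting fastest-first means a scraper that simply takes proxies from the front of the list will naturally prefer the best-performing ones.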

Finally, whether you choose private or public proxies, it is essential to balance cost against performance. For those serious about web scraping, a good paid proxy service generally yields better results than relying solely on free options. Scraping proxies with Python offers more customization and control, which makes it a popular method among data extraction enthusiasts.